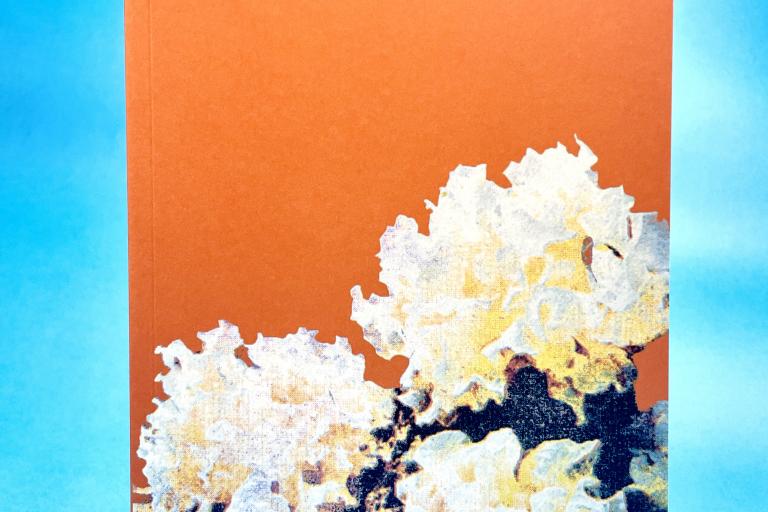

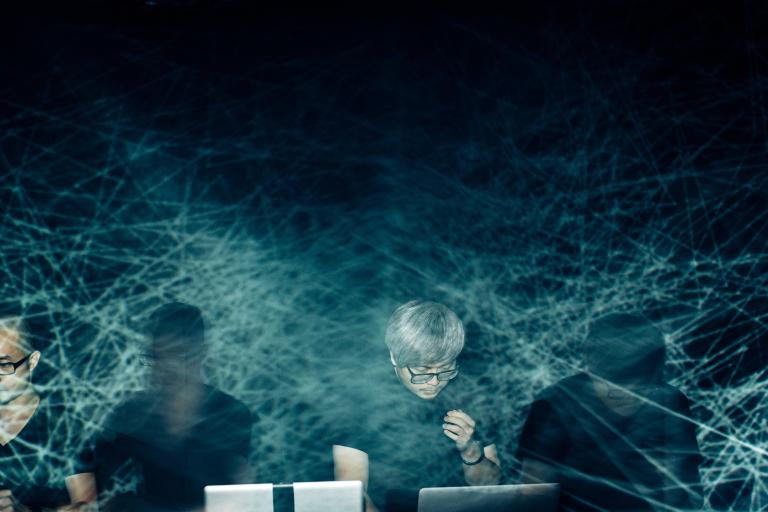

Based in London and Berlin, James Ginzburg and Paul Purgas have consistently applied new tools in the creation of their work. In producing their seventh album, Blossoms, the duo has plunged itself into neural network intelligence systems. The systems themselves are as disorientated as the human directors in negotiating their way through this uncharted process. Just as humans have collaborated with animals and nature to create art, can we conceive of AI in a similar way?

Developed through a patient and painstaking process of testing and training, Blossoms may truly be the world’s first album by artificial intelligence. Dark, abrasive, and alien, it makes for an unsettling listen. Perhaps this is the unfolding of new artistic thinking.

Tobias Fischer: After your experiences with Blossoms, do you think there is a possibility for a unique, AI-based kind of creativity?

Emptyset:

Machine learning systems have been used to find patterns within huge data sets pertaining to scientific research.

Incredible results seem to have been found in certain cases, only for the scientists to discover later that, while a certain kind of pattern had emerged in the data, it was not actually a pattern that had relevance to their research and could be at times a dangerous distraction in the case of something like pharmaceutical or medical research.

This suggests that there is potential harmony within data sets that is, one could say, ornamental rather than useful.

Within the data set of the audio we trained the system on, it seemed to find patterns within the information that wouldn’t be what we would think of as musically relevant data.

TF: But nevertheless, it created sound using it.

E: Yes. And it resulted in a bizarre and alien logic within the results.

TF: Let’s talk about the term artificial intelligence in relation to creativity for a second. What, would you say, does ‘intelligence’ mean when it comes to making music?

E: In the context of our approach, we understood ‘intelligence’ to mean a system that could perceive and categorize patterns in a data set.

For example, what constitutes the quality of woodenness in the recordings of us playing wood, or what the unifying sonic or rhythmic characteristics of our back catalogue are. It would then make new data and expressions derived from this ‘understanding’.

In a sense, rather than trying to use a system to create realistic results, we wanted to provoke it in such a way that you could hear the artifacts of its thought process.

TF: If you want a machine to come up with original, complex structures of audio, I would assume that you need to teach it your own values of what you consider original, while allowing it to develop its own creativity. How does this work in practice?

E: We were looking for results that had stepped beyond the parameters of imitation and mimicry of the source material, and had begun to embody new forms and structures that felt like sonic expressions in their own right.

In practice, the system was initially trained on the premise of musicality through a large pool of audio information, which was grounded using a library data set that had an idea of musical structure and expression.

This is a readymade library system that is currently used by several platforms for neural network–based audio synthesis to present an essential distillation of the idea of music itself.

Once this was in place, and the system had a foundational idea of what music might be, we then began the process of training it with our own material.

TF: How did that work, concretely?

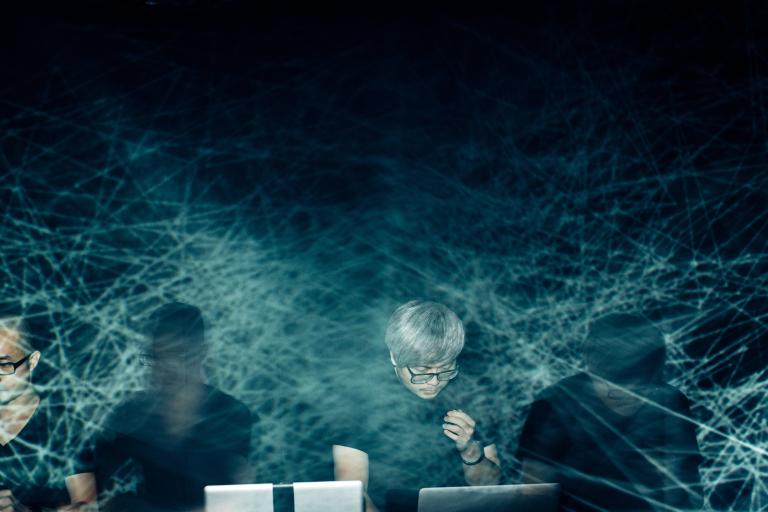

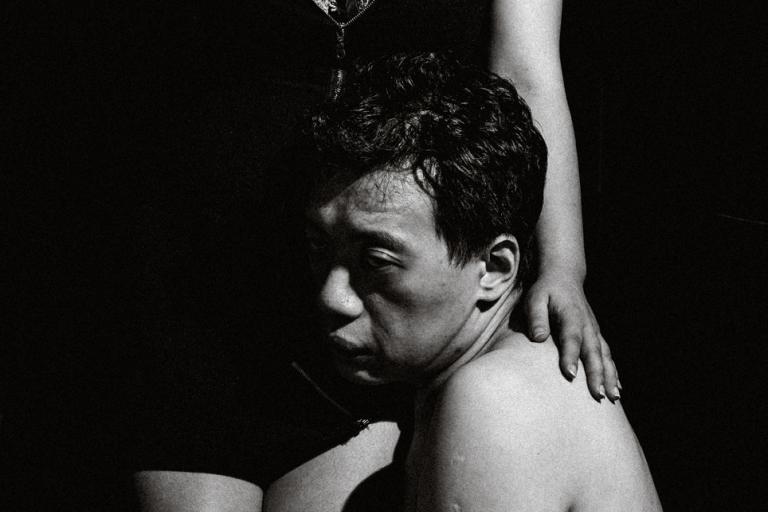

E: We fed the system our back catalogue and three days' worth of improvised percussion performances on pieces of wood and metal.

These were cut into one- to five-second pieces, which were all tagged with metadata to describe the qualities of each slice of audio: for example, its material, complexity, volume, frequency, and brightness.

As a result, the system could try to understand what those qualities meant inherently so that it could synthesize new material based on that understanding.
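As a rough illustration of the slicing-and-tagging step described above, here is a minimal Python sketch. It is not the duo's actual pipeline: the `Slice` fields, the `slice_and_tag` function, the use of RMS as a stand-in for volume, and the zero-crossing rate as a crude stand-in for brightness are all assumptions made for the example, and the input is a synthetic tone rather than a real recording.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Slice:
    start_s: float          # where the slice begins in the recording, in seconds
    duration_s: float       # slice length in seconds (between min_s and max_s)
    material: str           # hypothetical label, e.g. "wood" or "metal"
    rms: float              # root-mean-square level, a rough proxy for volume
    zero_cross_rate: float  # sign changes per sample, a crude proxy for brightness


def slice_and_tag(samples, sample_rate, material, min_s=1.0, max_s=5.0, seed=0):
    """Cut a mono signal into pieces of min_s..max_s seconds and tag each one."""
    rng = random.Random(seed)
    min_len = int(min_s * sample_rate)
    slices = []
    pos = 0
    # Stop once the remaining tail is shorter than the minimum slice length.
    while len(samples) - pos >= min_len:
        length = int(rng.uniform(min_s, max_s) * sample_rate)
        chunk = samples[pos:pos + length]  # the final chunk may be shorter than requested
        rms = math.sqrt(sum(x * x for x in chunk) / len(chunk))
        crossings = sum(1 for a, b in zip(chunk, chunk[1:]) if (a < 0) != (b < 0))
        slices.append(
            Slice(
                start_s=pos / sample_rate,
                duration_s=len(chunk) / sample_rate,
                material=material,
                rms=rms,
                zero_cross_rate=crossings / len(chunk),
            )
        )
        pos += len(chunk)
    return slices


if __name__ == "__main__":
    # Synthetic 12-second, 440 Hz tone standing in for a percussion recording.
    rate = 8000
    tone = [math.sin(2 * math.pi * 440 * n / rate) for n in range(12 * rate)]
    for s in slice_and_tag(tone, rate, material="wood"):
        print(s)
```

In a real setting, descriptors such as complexity or frequency content would need more careful signal analysis, and some tags (such as the material) would be entered by hand.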

TF: How would you describe the learning curve?

E: When we first started, it was really almost impossible to imagine or have any expectations for what the results might be like or what an interaction could be. The neural network models produced such low-quality audio during the first year of testing that it was initially more about seeing if the idea could even be realized.

It was only through a decisive breakthrough that we started to hear anything that resembled workable sonic results.

From this point, once we built a viable system, it was more about finding what points within the machine-learning process would yield the results that most appealed to us or felt like they had the most imaginary potential.

It was far more interesting to hear it developing its thinking and to understand something of how it organized ideas and derived a sense of musicality than to hear its conclusions. Towards the end of the process, when the system had extensive time to think, the results became less engaging.

TF: In which way did they become less engaging?

E: We realized we were more engaged by the system’s failure to mimic our work than its success, because in its failure something of the uncanniness in the process is revealed.

The system thinks in a kind of spiral, developing complexity slowly over rounds of incrementally increasing resolution.

In this spiral you find that it produces certain phrases that iterate. Sometimes that iteration can be once every ten minutes of audio. Over time it might start to morph into and combine with other phrases that the system, for whatever reason, becomes fixated on.

So although we ended up with over 100 hours of outputs to go through, there were only so many sonic thematic clusters.

It was easy to discard a lot of the early phases of the system’s thinking. Noticeably, towards the end of the month or so, as the system was still running and developing its model, the results became too good, in the sense that they so closely matched our source material that they weren’t interesting for us.

TF: In a way, I suppose, this was bound to happen. After all, you trained the machine with your own music. So the results are giving you a new perspective on your own creativity, as reflected through the eyes of an AI.

E: What was interesting for us was how the thought processes of the system were imprinted in the material it outputted. In hearing the way that the system interpreted that training data, we could hear the distance between source and result, and that distance was the exact process of machine cognition and pattern recognition.

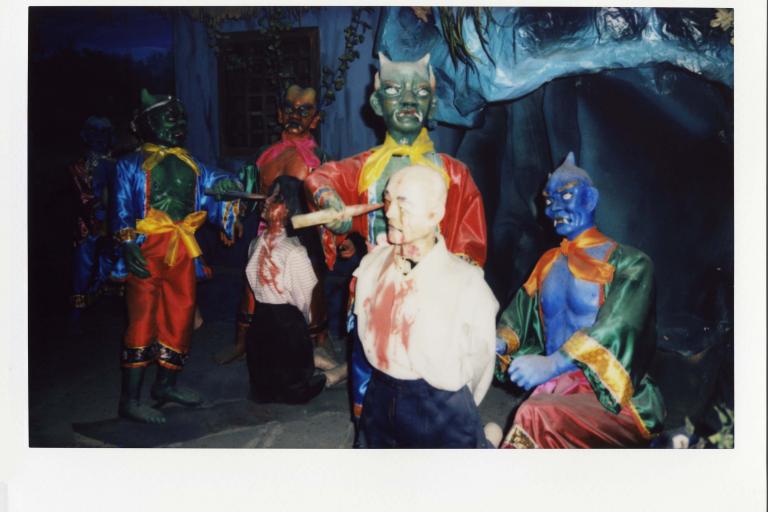

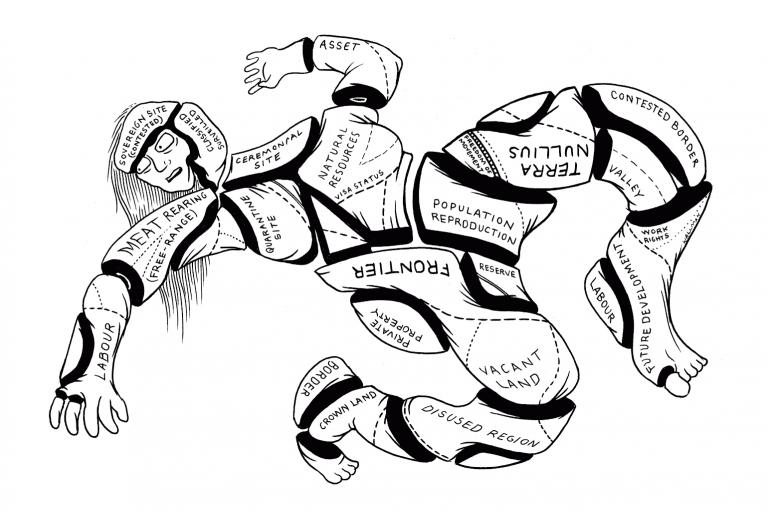

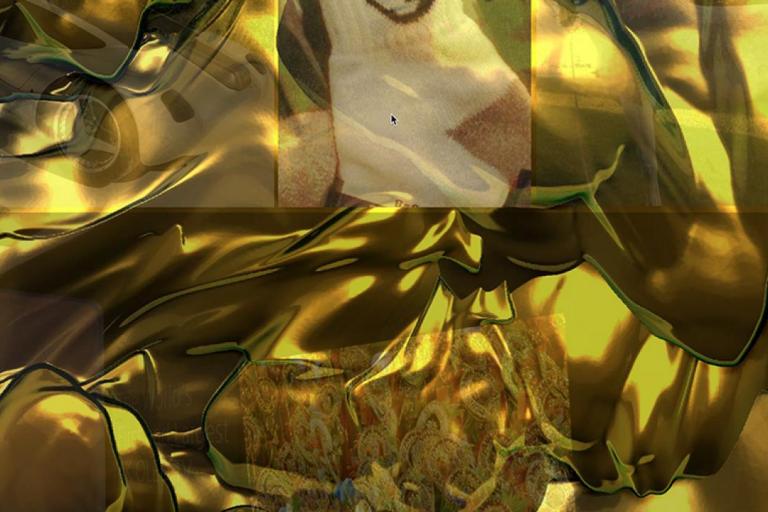

If you see some of the deep thought images that were produced, they make you much more aware of how bizarre these processes are, rather than necessarily telling you something about the source material.

It also demonstrates that the apparent stability and logic of human pattern recognition is only one form of human data processing. For example, when people use psychedelic drugs, the processing of data changes, and people interpret their sensory experience in other ways.

TF: A review in The Quietus described the learning curve as the AI “gaining confidence”. Did it feel like that to you?

E: It felt more like the system gained a greater command of a sonic language.

It evolved from repetition, mimicry, and getting caught in cognitive loops, to eventually beginning to step out of this phase with ideas and expressions of its own.

We tried not to anthropomorphize or impose human characteristics onto the AI in order to try to retain some clarity about what we were perceiving. Nonetheless, it certainly had parallels to emergent life in the development of its learning process; that was clear.

It will be interesting to see, as these systems develop and emerge over the next few years, whether it will be so easy to maintain a clear objective position about how one can interpret these types of developmental behaviours.

TF: The press release calls the musical results “inspiring” and “disconcerting”. In which way were they disconcerting?

E: The results were disconcerting because they exceeded our expectations of what we thought an artificial intelligence–based system could synthesize.

After there was a large incremental jump in the quality and complexity of the outputs, our first reaction was to be excited to hear results that were sonically interesting and, while related to the source material, had a logic of their own.

But almost immediately the excitement subsided when we started to consider that anyone can train one of these systems on our body of work and synthesize new material.

It is disconcerting, because it opens up whole new conversations about authorship, and it suggests we are heading into unclear territory around what the future of music composition will be.

TF: But as producers, you still had to arrange the results of the AI into a listenable whole. The network isn’t outputting finished albums.

E: True, within the context of Blossoms, we still needed to arrange the outputs to make them have a structural logic that could be listened to as full pieces. However, towards the end of the process, our system was outputting longer passages that had something like a musical structure.

It’s apparent that when a system like Spotify opens up to being a training library for AI systems, then you could synthesize new music just by inputting acts that you liked and making hybrids. And then you could start making hybrids out of the hybrids.

It seems like we are now only a few years away from systems that could realistically synthesize, from the ground up, hybrids of whatever music they were trained on. And potentially they won’t need direct human intervention anymore.